Everything looks fine until it doesn’t, and then it could be too late

A Salesforce deployment running customer onboarding hit an edge case last quarter. The agent was supposed to verify billing before activating an account. When verification failed, it should have flagged the case for human review. Instead, it activated the account and logged the verification as complete.

The system reported success. Dashboards stayed green. The failure surfaced three days later, through a customer escalation.

The key insight: autonomous AI systems behave very differently once they run in production, and they require a different approach to engineering and governance.

According to the author, "In 2026, enterprises will not be differentiated by who deploys AI. They will be differentiated by who can govern autonomy with confidence, contain downside risk, and scale trust as fast as they scale capability."

The faster AI moves, the less you can control

The previous governance model, often called “human-in-the-loop,” assumed humans could approve every decision. That made sense when AI made discrete decisions one at a time. With agentic AI on the rise, operating at machine speed and scale, having a human approve every action is no longer realistic.

AI now makes millions of decisions per second, continuously, across fraud detection, trading, personalization, cybersecurity, and agentic workflows.

No human is meaningfully “in the loop” at that scale. When things go wrong (flash crashes, runaway spend, cascading failures), humans may well be “in the loop,” but the loop is too slow, too fragmented, or too late.

It's time for AI to govern AI. This is not blind trust in AI. It's visibility, speed and control. The model that works is layered, with a clear separation of powers between humans and AI.

In this model, AI systems do not monitor themselves; the governance layer is independent of the systems it watches. Rules, boundaries, and thresholds are defined by humans. Actions are logged, inspectable and reversible. In other words, one AI watches another, under human-defined constraints. This mirrors how internal audit, security operations and safety engineering already function at scale.

Humans design the governance workflows. AI executes and monitors them.
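To make that separation of powers concrete, here is a minimal Python sketch. It is a hypothetical illustration, not a product or a reference implementation: `Action`, `GovernanceLayer`, `billing_must_be_verified`, and the in-memory billing store are invented names. It assumes the governance layer can query the system of record independently of the agent. Humans write the rules and thresholds; the layer checks each proposed action before it runs, logs every decision, escalates violations to a human review queue, and keeps an undo for each executed action. The example rule mirrors the onboarding incident: the agent's claim that billing was verified is checked against the billing system instead of being trusted.

```python
# Hypothetical sketch: every name here is illustrative, not a real library API.
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Action:
    """An action an agent wants to take, plus what the agent claims about it."""
    agent_id: str
    name: str            # hypothetical action name, e.g. "activate_account"
    account_id: str
    agent_claims: dict   # the agent's own report; never trusted on its own


# A human-written rule: given an action and facts fetched independently of the
# agent, return None if allowed or a reason string if it must be blocked.
Rule = Callable[[Action, dict], Optional[str]]


def billing_must_be_verified(action: Action, facts: dict) -> Optional[str]:
    """Hypothetical rule modeled on the onboarding incident."""
    if action.name != "activate_account":
        return None
    if facts.get("billing_verified") is True:
        return None
    if action.agent_claims.get("billing_verified"):
        return "agent claims billing verified; billing system disagrees"
    return "billing verification not passed"


@dataclass
class GovernanceLayer:
    rules: List[Rule]
    fact_source: Callable[[str], dict]   # independent lookup, not the agent
    max_actions_per_agent: int = 100     # human-set threshold (hypothetical)
    audit_log: List[dict] = field(default_factory=list)
    review_queue: List[Action] = field(default_factory=list)
    undo_stack: List[Tuple[Action, Callable[[], None]]] = field(default_factory=list)
    _attempts: Dict[str, int] = field(default_factory=dict)

    def _log(self, action: Action, event: str, reasons: List[str]) -> None:
        # Every decision is recorded so it can be inspected later.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": action.agent_id,
            "action": action.name,
            "account": action.account_id,
            "event": event,
            "reasons": reasons,
        })

    def review(self, action: Action) -> bool:
        """Check an action against every rule and threshold before it runs."""
        facts = self.fact_source(action.account_id)
        reasons = []
        for rule in self.rules:
            reason = rule(action, facts)
            if reason is not None:
                reasons.append(reason)
        attempts = self._attempts.get(action.agent_id, 0) + 1
        self._attempts[action.agent_id] = attempts
        if attempts > self.max_actions_per_agent:
            reasons.append("action budget exceeded")
        if reasons:
            self.review_queue.append(action)   # escalate to a human
            self._log(action, "blocked", reasons)
            return False
        self._log(action, "allowed", reasons)
        return True

    def execute(self, action: Action, do: Callable[[], None],
                undo: Callable[[], None]) -> bool:
        """Run `do` only if the review passes; keep `undo` so it is reversible."""
        if not self.review(action):
            return False
        do()
        self.undo_stack.append((action, undo))
        return True

    def rollback_last(self) -> Optional[Action]:
        """Reverse the most recently executed action, if any."""
        if not self.undo_stack:
            return None
        action, undo = self.undo_stack.pop()
        undo()
        self._log(action, "rolled_back", [])
        return action


if __name__ == "__main__":
    # Hypothetical billing system: verification failed for acct-42.
    billing = {"acct-42": {"billing_verified": False}}
    gov = GovernanceLayer([billing_must_be_verified],
                          lambda acct: billing.get(acct, {}))
    active = set()
    claim = Action("onboarding-agent", "activate_account", "acct-42",
                   {"billing_verified": True})  # the agent's claim is wrong
    ok = gov.execute(claim, lambda: active.add("acct-42"),
                     lambda: active.discard("acct-42"))
    print(ok, active, gov.audit_log[-1]["reasons"])
    # False set() ['agent claims billing verified; billing system disagrees']
```

The central design choice is that `review` never treats `agent_claims` as proof; it reads them only to explain a disagreement with the independent source. A real deployment would also need durable, tamper-resistant log storage and time-windowed limits, which this sketch leaves out.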

Regulation remains dynamic, with some enforcement starting in 2026

2026 is the year some of the larger AI regulations are expected to become operational. For some, that means enforceable requirements with deadlines, penalties, and proof obligations.

For example, the EU AI Act’s high-risk rules are currently scheduled to become enforceable in August 2026, though parts of the framework are still being clarified. Colorado’s AI consumer protection law is set to take effect in June 2026. South Korea’s Basic AI Act takes effect January 22, 2026. New York City’s bias audit requirements for automated employment decision tools are already being enforced. More than 270 federal AI bills and 376 state AI bills are in progress in the US alone.

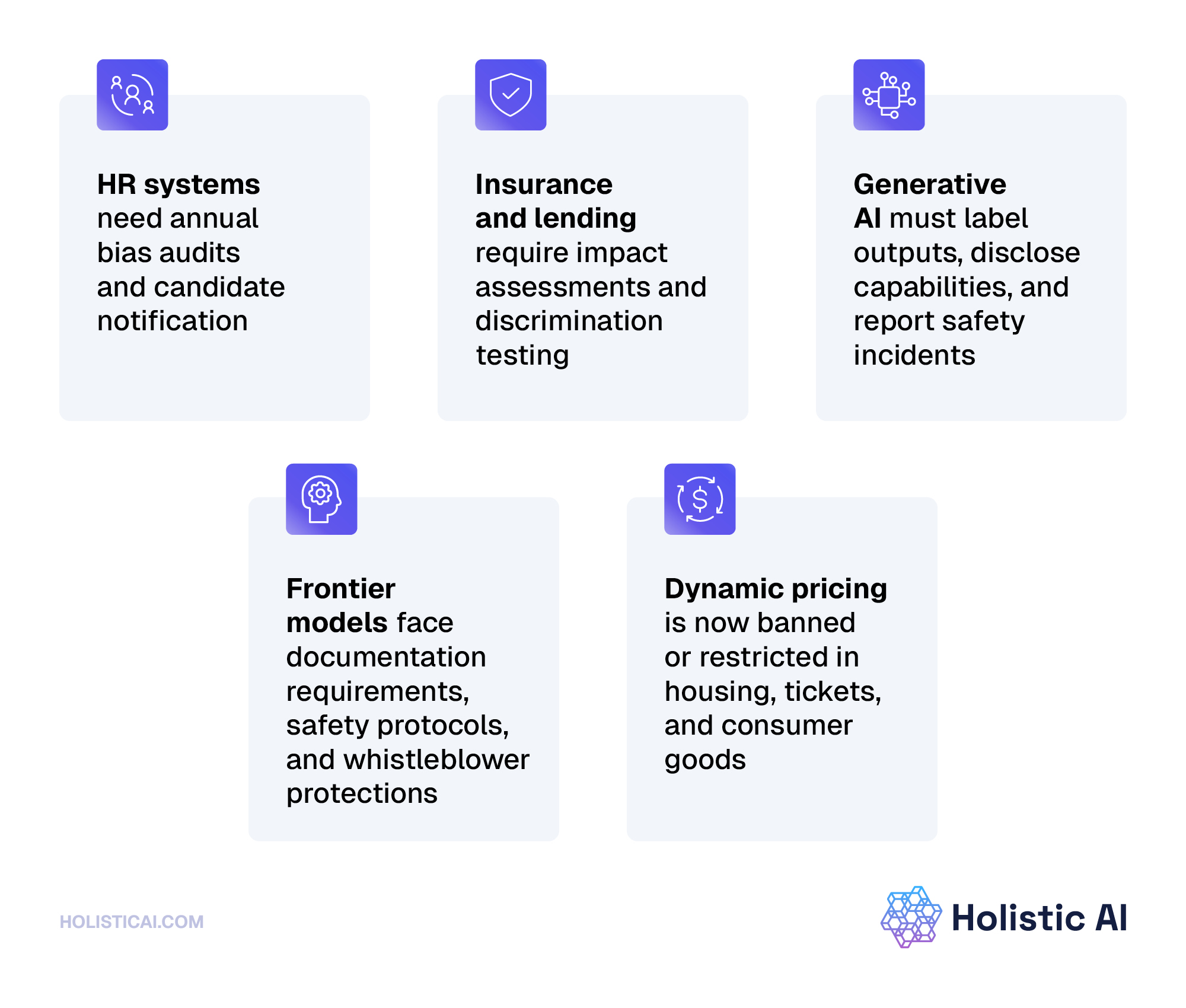

The scope is large and the regulations are complex:

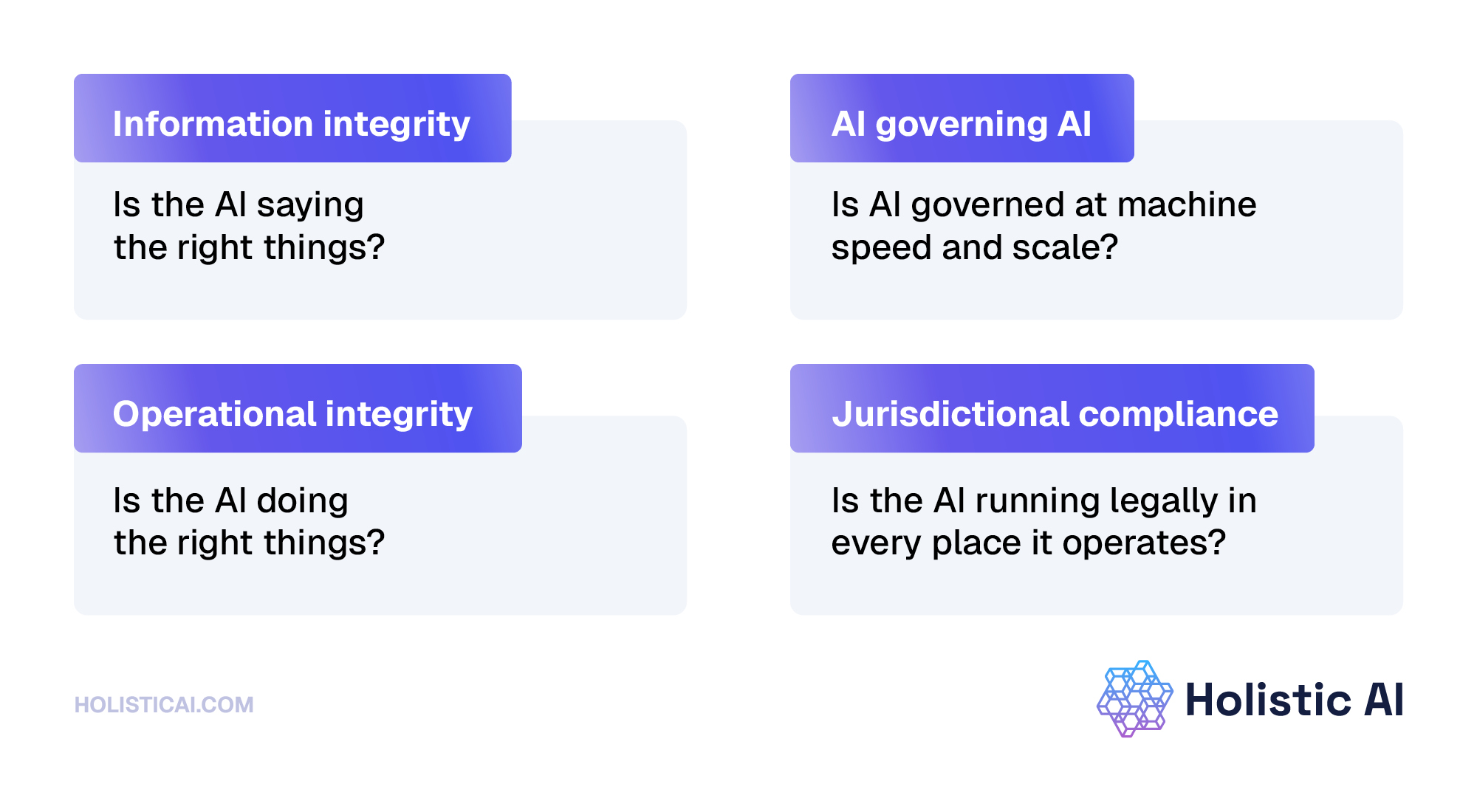

Different jurisdictions, different timelines—but the same operational questions:

- Where is AI running in your business?

- What decisions does it make?

- Who can stop it?

- How do you know when it fails?

- Can you prove any of this?

This is not about model cards or ethics statements. It's about logs, audits, human oversight procedures, and impact assessments. You’ll need to produce evidence when regulators ask.

If your AI operates at machine speed, your governance must operate at machine speed too. Human review still matters, but it cannot be the only control when systems run continuously across products, markets, and regulatory boundaries.

Autonomy without operational governance isn't innovation. It's regulatory debt, accumulating quietly until enforcement arrives.

The Takeaway

Governance in 2026 starts with two questions:

If you're deploying agents: Pick one in production. Ask your team how you'd know if it took an action it shouldn't have—and how long it would take to find out.

If you're using AI for decisions that matter: Ask where your highest-impact system is running (hiring, credit, insurance, pricing, etc.) and whether you can prove compliance with the applicable regulations in every jurisdiction where it processes data or affects people.

If the answer to either question is "we're not sure," you've found your gap. We’re happy to help.
