There was a time in the early days of the modern AI wave when concerns over the safe, responsible, and trustworthy use of AI could be easily allayed by the notion of including a human in the loop.

Don’t worry. If something goes wrong, a human will be there to see it and fix it.

The concept of a human in the loop reassured regulators, executives, and risk teams that AI systems remained under human control. It promised accountability. Oversight. Safety by design.

But as AI systems have become larger, more complex, and more embedded in the fabric of everyday decision making, placing a human in the loop is no longer a realistic approach. In fact, it is rapidly becoming little more than a comforting myth.

The uncomfortable truth is that humans in the loop cannot govern modern AI systems at the speed, scale, or complexity at which they now operate. What’s more, treating a human in the loop as a governance strategy creates blind spots precisely where risk is highest.

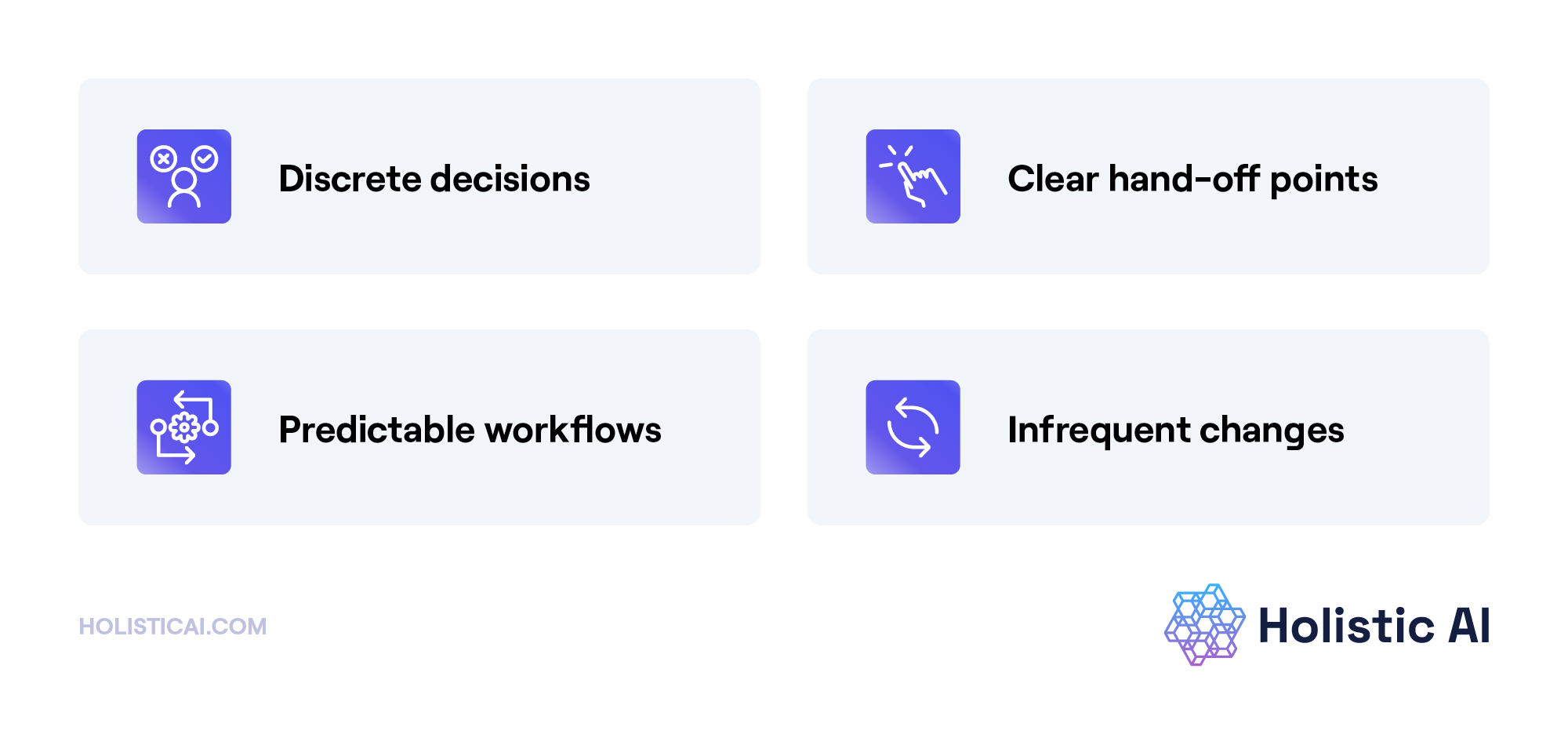

Human in the loop assumes that AI systems behave like traditional software, including:

Agentic AI systems, by contrast, violate all of these assumptions. They plan and adapt over time. They decompose goals. Invoke tools. Generate and execute code. And they interact with other agents that are also adapting continuously.

In other words, there isn’t a meaningful moment at which a human can step in and approve their actions without:

As a result, human-in-the-loop approvals become increasingly ceremonial over time since humans are approving abstractions, not run-time behavior.

Modern AI systems operate at machine speed. Governance mechanisms that require human review simply can’t keep up. And this mismatch is only getting worse over time.

Consider what is needed for the effective governance of agentic AI systems, including:

Humans simply can’t do this in real time or at scale. Sampling-based reviews risk missing catastrophic failures. Manual, after-the-fact reviews arrive too late. And static rules break down faster than they can be updated.
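To make the sampling point concrete, here is a minimal sketch of the arithmetic. It assumes each agent action is independently selected for human review with probability `sample_rate`, which is an illustrative simplification rather than a model of any real review process. The function name and the 1% figure are hypothetical.

```python
def prob_all_missed(sample_rate: float, failures: int) -> float:
    """Probability that none of `failures` bad actions lands in the review sample.

    Assumes each action is sampled independently with probability
    `sample_rate` (an illustrative assumption).
    """
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    if failures < 0:
        raise ValueError("failures must be non-negative")
    # Each bad action escapes review with probability (1 - sample_rate).
    return (1.0 - sample_rate) ** failures


if __name__ == "__main__":
    # Hypothetical scenario: reviewers inspect 1% of agent actions.
    for k in (1, 10, 100):
        p = prob_all_missed(0.01, k)
        print(f"{k} bad action(s), 1% sampling: missed entirely with probability {p:.3f}")
```

Under these assumptions, a single catastrophic action slips past a 1% sample 99% of the time, which is why sampling alone offers little protection against rare, high-impact failures.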

As AI systems operate increasingly autonomously, so too must governance.

AI governing AI can:

AI governance systems can reason over signals that humans simply can’t see, such as drift, tool misuse patterns, latent risk accumulation, and cross-agent feedback loops.

The key to making this work is engineering governance as an AI control subsystem, instead of thinking of oversight as something manual that happens after the fact.
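One way to picture governance as a control subsystem is a gate that every proposed agent action passes through before it executes. The sketch below is illustrative only: `Action`, `GovernanceGate`, `deny_tools`, and `escalate_tools` are hypothetical names invented for this example, not part of any real product or library, and a production system would use far richer policies than tool-name checks.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List, Set, Tuple


class Verdict(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    ESCALATE = "escalate"  # hand off to a human-designed intervention path


@dataclass(frozen=True)
class Action:
    """A proposed agent action (hypothetical shape)."""
    agent_id: str
    tool: str
    argument: str


# A policy inspects one proposed action and returns a verdict.
Policy = Callable[[Action], Verdict]


def deny_tools(blocked: Set[str]) -> Policy:
    """Block any action that uses a tool in `blocked`."""
    def policy(action: Action) -> Verdict:
        return Verdict.BLOCK if action.tool in blocked else Verdict.ALLOW
    return policy


def escalate_tools(sensitive: Set[str]) -> Policy:
    """Escalate any action that uses a tool in `sensitive`."""
    def policy(action: Action) -> Verdict:
        return Verdict.ESCALATE if action.tool in sensitive else Verdict.ALLOW
    return policy


@dataclass
class GovernanceGate:
    """Evaluates every action at run time and records the decision."""
    policies: List[Policy]
    audit_log: List[Tuple[Action, Verdict]] = field(default_factory=list)

    def check(self, action: Action) -> Verdict:
        verdicts = [policy(action) for policy in self.policies]
        # The most restrictive verdict wins: BLOCK, then ESCALATE, then ALLOW.
        if Verdict.BLOCK in verdicts:
            result = Verdict.BLOCK
        elif Verdict.ESCALATE in verdicts:
            result = Verdict.ESCALATE
        else:
            result = Verdict.ALLOW
        self.audit_log.append((action, result))
        return result


if __name__ == "__main__":
    gate = GovernanceGate([deny_tools({"shell"}), escalate_tools({"payments"})])
    for tool in ("search", "payments", "shell"):
        print(tool, gate.check(Action("agent-1", tool, "example")).value)
```

In this sketch, humans write and maintain the policies and decide what happens on escalation, while the gate applies them to every action at machine speed and keeps an audit trail.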

Removing humans from the execution workflow does not remove human accountability from the system. In fact, the role of humans in designing agentic AI systems that are safe, fair, and trustworthy is more critical than ever. From a system design standpoint humans need to:

In other words, rather than sitting in the loop, humans need to move to designing the governance system. This isn’t new. Humans have automated themselves out of the execution loop in other large, complex technical systems. For example, humans do not manually control packet routing, power grids, or financial markets at run time. Instead, they have designed controls, continuous monitoring, and ways to intervene when a system raises an alert.

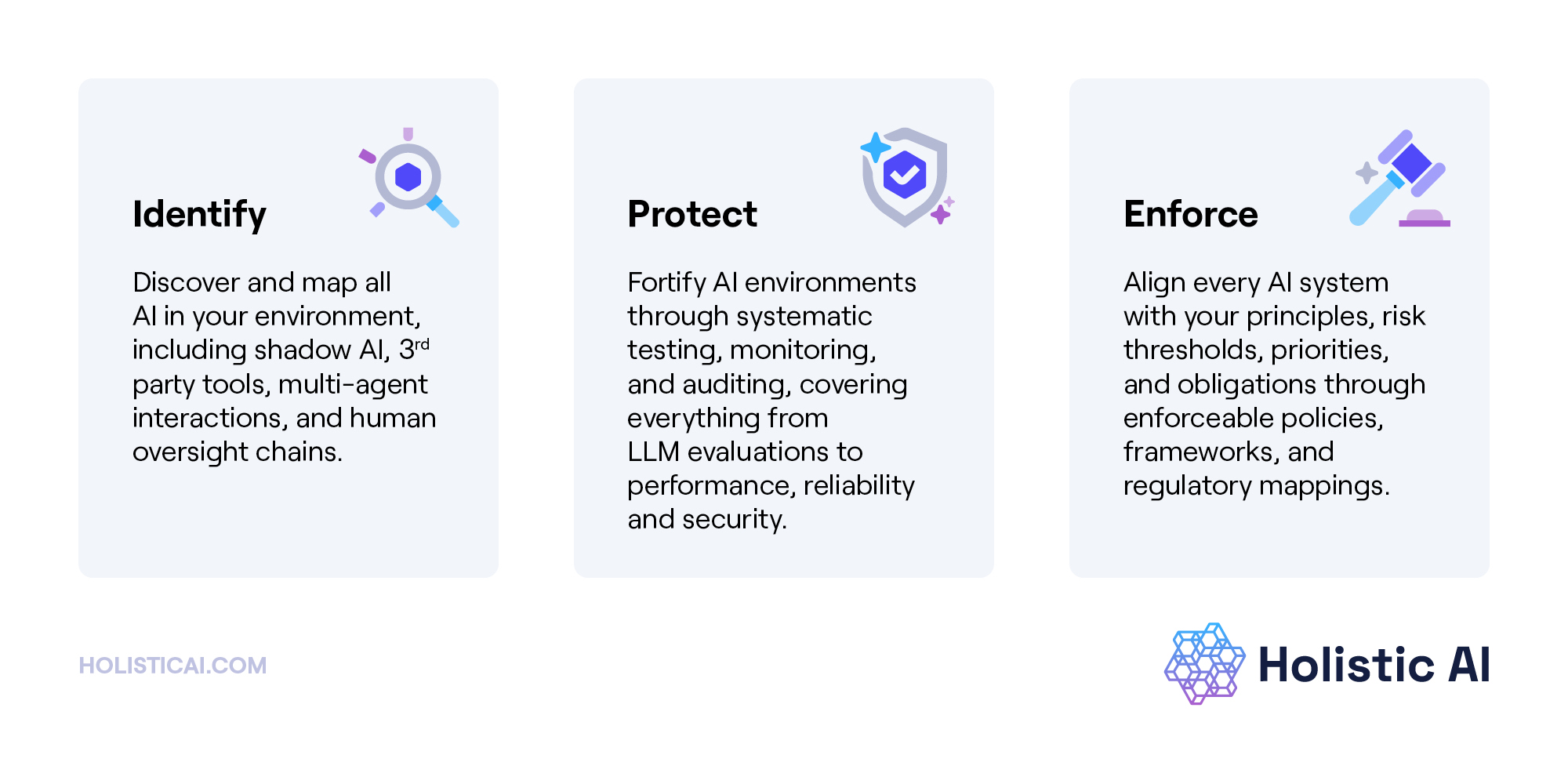

The Holistic AI Governance Platform provides continuous visibility, testing, monitoring, and enforceable controls across the AI lifecycle, especially as agents interact with tools, data, and people. It offers an end-to-end lifecycle oversight approach, helping organizations manage the complexity of modern AI systems automatically, responsibly, and efficiently.

With Holistic AI, teams gain the visibility, governance, and control needed to scale agentic AI with confidence. Holistic AI gives organizations the assurance to begin moving past outdated modes like human-in-the-loop and toward a more automated future, where AI safely governs AI.

Want to learn more? Schedule a demo.
