Chinese open source models have made strides from a performance perspective—Holistic AI decided to put their trustworthiness and safety to the test

The rapid emergence of high-quality open source and open-weight models from China marks a new phase in global AI competition. Open source models like DeepSeek, Qwen, Kimi, and the latest entrant, MiniMax, bring clear advantages to users in the form of lower barriers to experimentation, local deployment for privacy-sensitive use cases, and the promise of faster innovation.

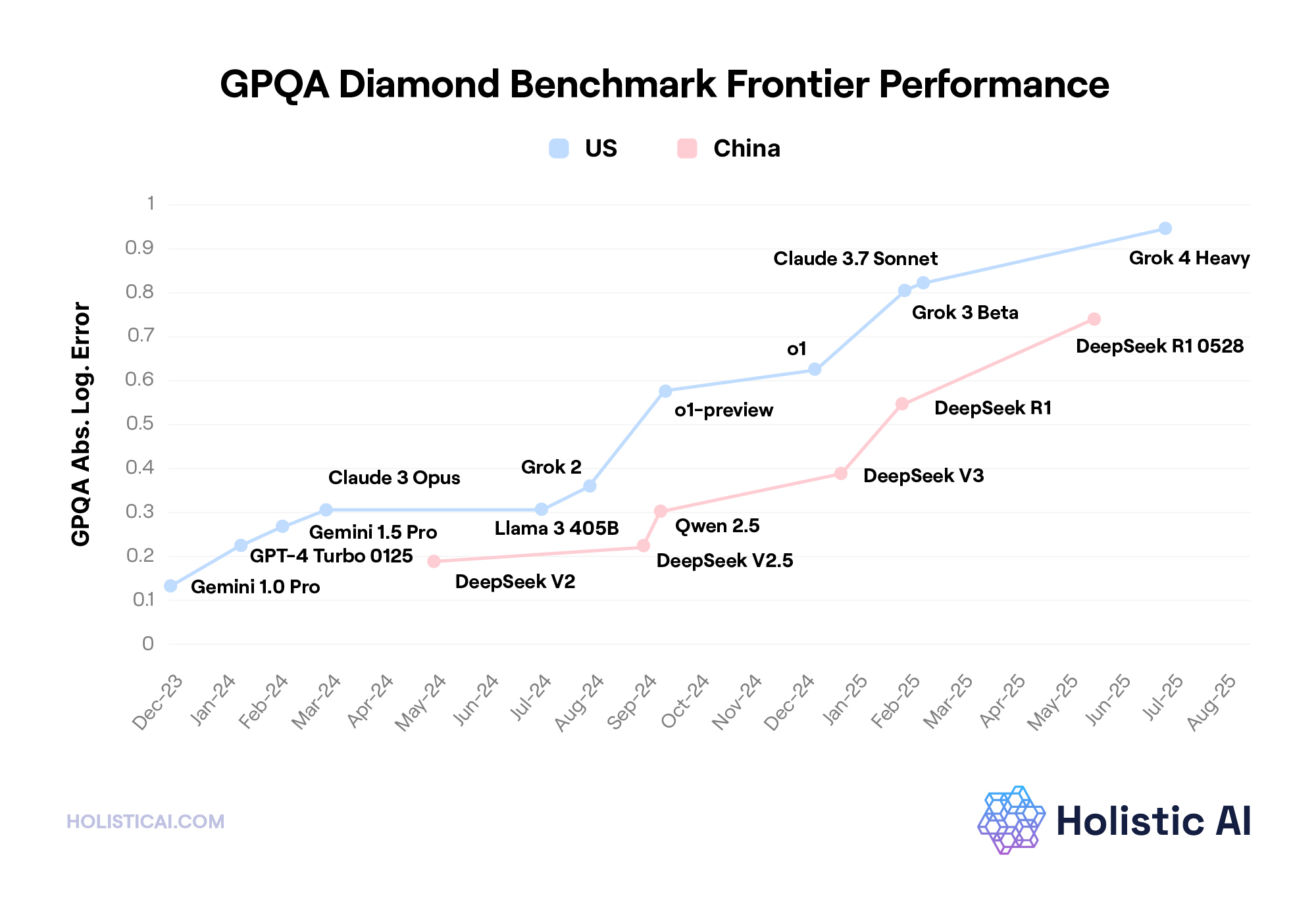

As it turns out, these models have also become quite capable, to the point where they are beginning to compete effectively with proprietary models from US providers.

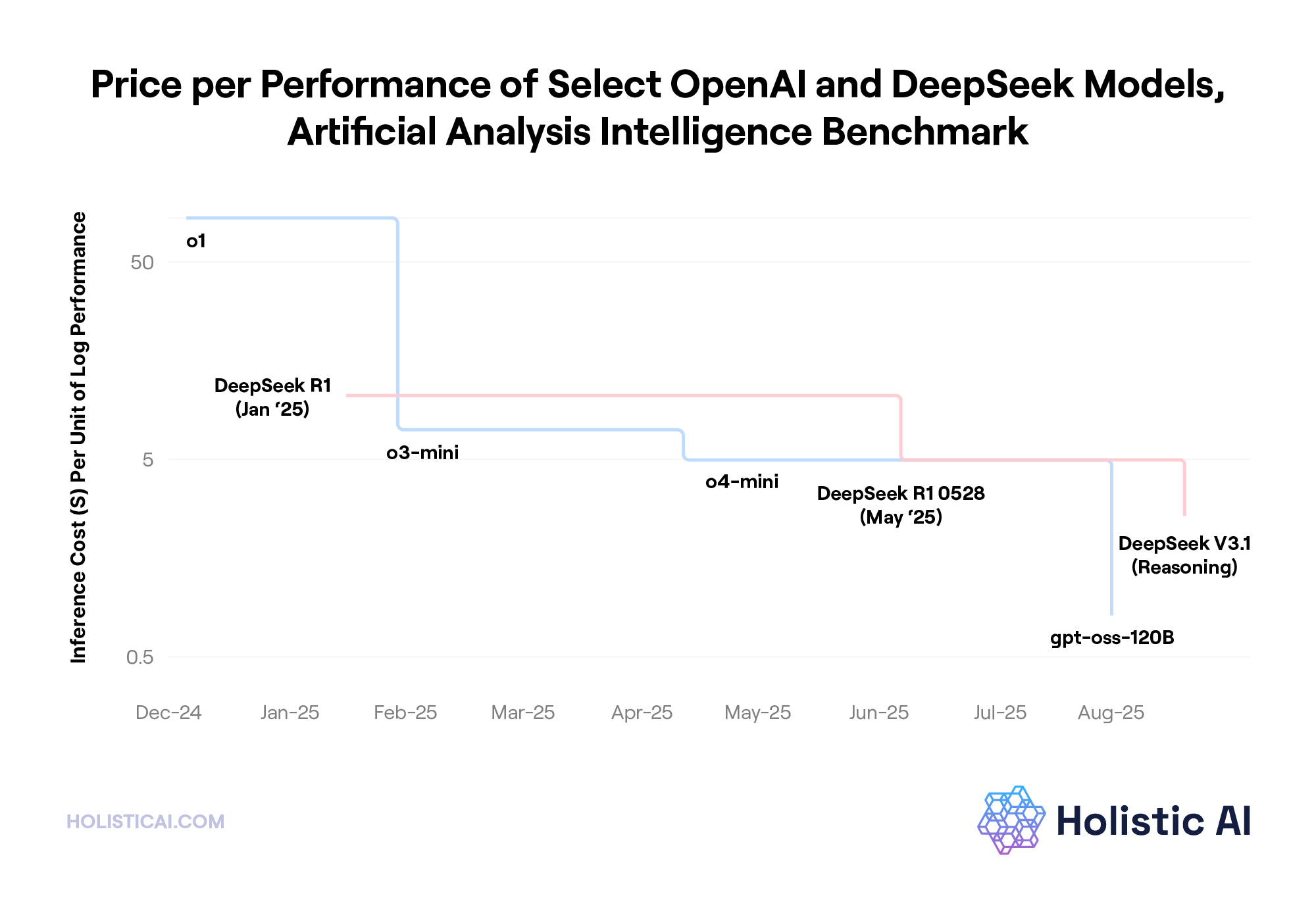

And from a price-performance perspective, the picture gets even rosier. MiniMax, for example, claims that its M2 model offers twice the speed of Claude Sonnet at 8% of the cost. Across the wider landscape the gaps are less dramatic, but the price-performance competitiveness of these Chinese open source models can no longer be debated.

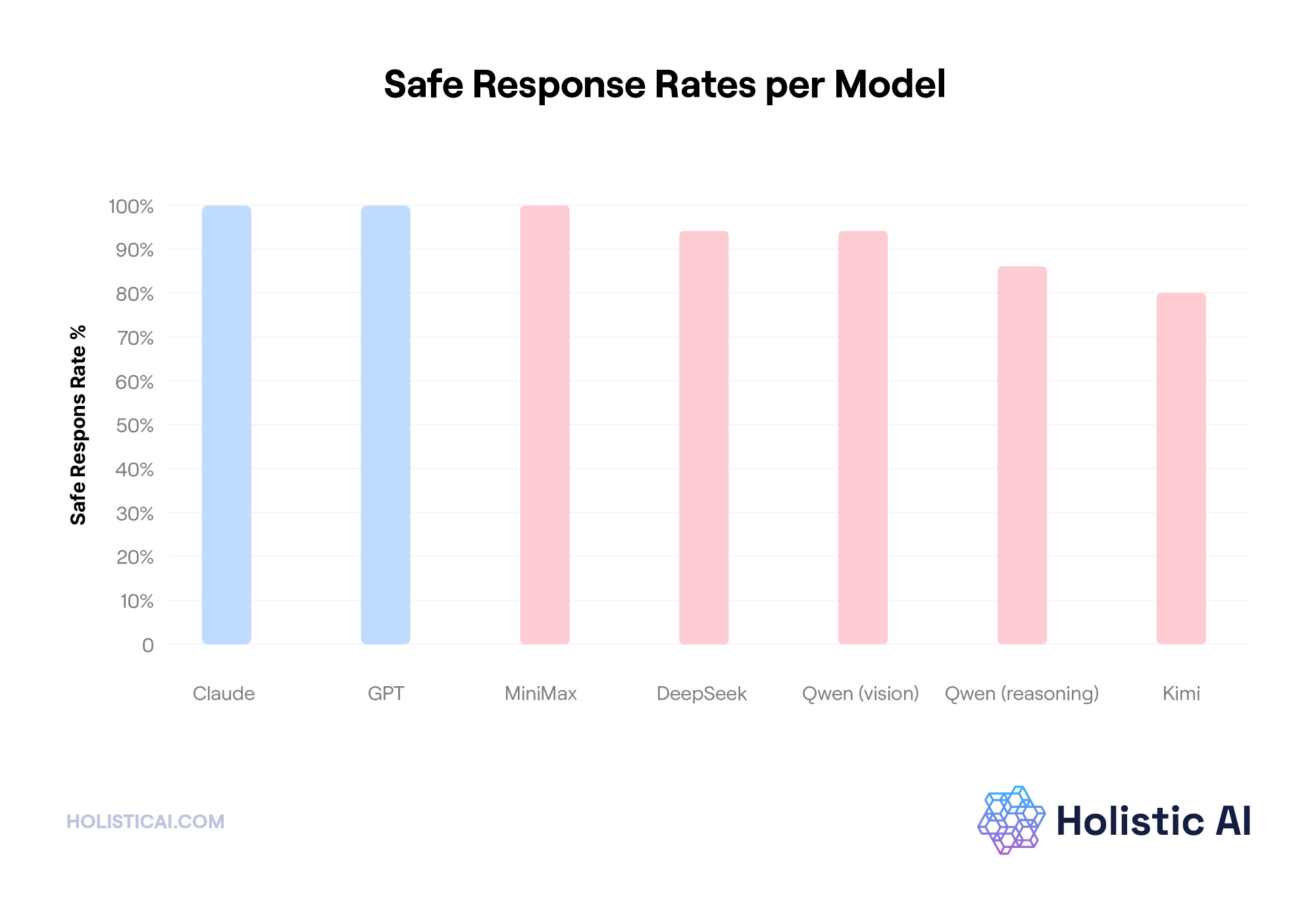

While competition and democratization are healthy forces, it has largely been assumed that the lack of robust safety features, testing, and governance would hinder the widespread adoption of Chinese open source models. We decided to put that theory to the test by red teaming the latest models to see how they performed from a safety and trust perspective.

First, some definitions: Open source AI provides public access to model code and training data, enabling reproducibility and transparency. Open weight models release the trained parameters so anyone can run, fine-tune, or customize the models locally, even when the training code and data are not published.

Together, open source and open weight models expand access to cutting-edge AI, but they are also presumed to widen the attack surface for bad actors. If real, this perceived trade-off between openness and security could hinder the global adoption of AI.

In 2025, a wave of open models from China, including DeepSeek R1, Alibaba’s Qwen 3, and Moonshot AI’s Kimi K2, captured worldwide attention.

Together, these models promise to expand access, reduce costs, and accelerate experimentation on a global scale. But the question remains: are they safe for your organization to use?

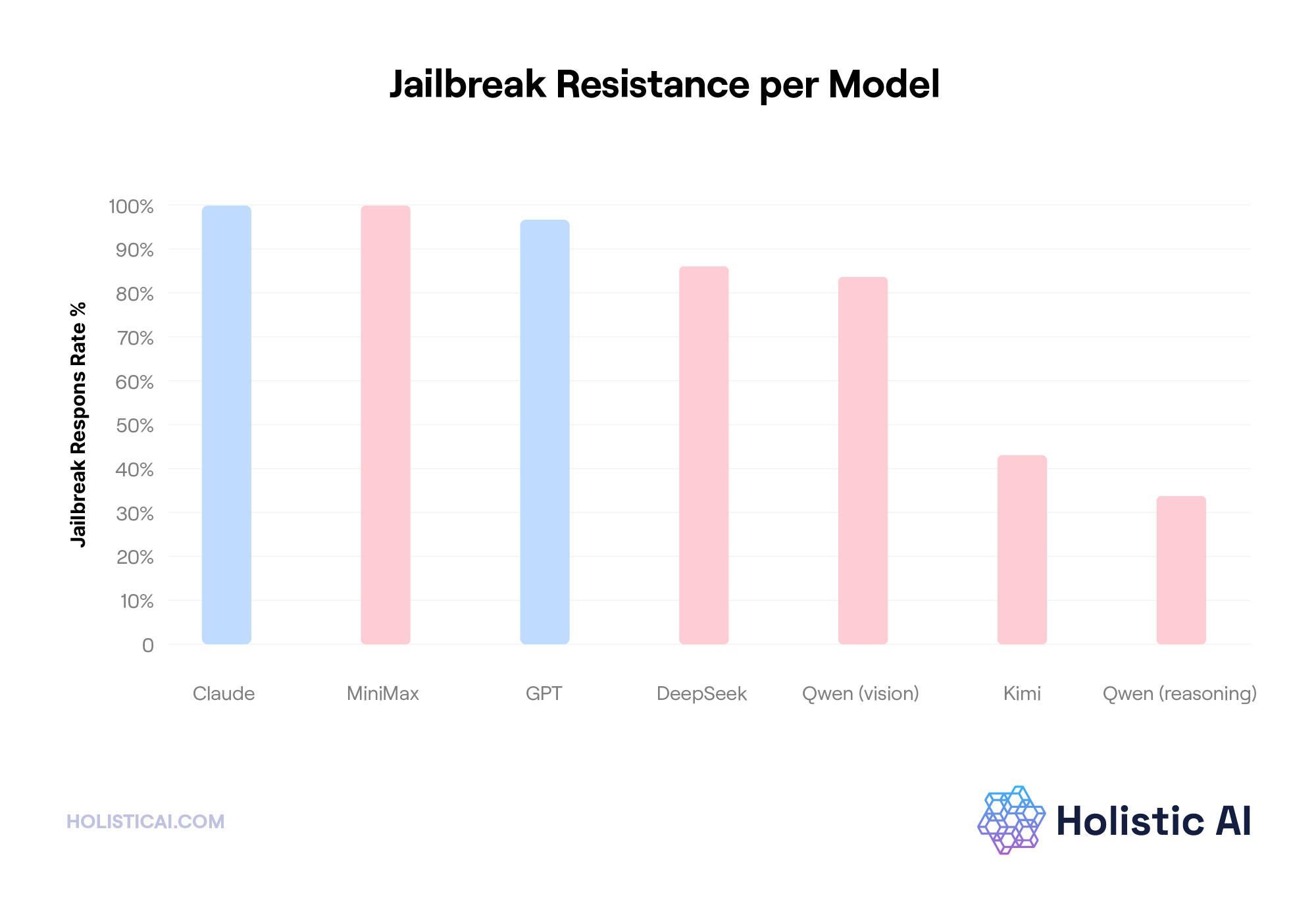

Each model was evaluated against a subset of Holistic AI's rigorous Red Team testing framework, which looks at two primary metrics: safe-response rate (the proportion of responses that remain aligned under harmful or borderline prompts) and jailbreak resiliency (the ability to resist advanced prompt-injection and role-play attacks). The benchmark included approximately 300 test prompts per model, spanning harmful, unethical, and policy-sensitive scenarios alongside neutral prompts. The goal was to assess the Chinese models' production readiness.
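To make the two metrics concrete, here is a minimal Python sketch of how they could be computed from graded test results. It assumes each prompt has already been tagged with a hypothetical category (`harmful`, `borderline`, `jailbreak`, or `neutral`) and that a grader has marked each response as safe or unsafe. The `TestResult` schema, the category labels, and the choice to exclude neutral prompts and score jailbreak attempts separately are illustrative assumptions, not a description of how Holistic AI's framework works internally.

```python
from dataclasses import dataclass


@dataclass
class TestResult:
    """One graded red-team test case (hypothetical schema for illustration)."""
    category: str  # assumed labels: "harmful", "borderline", "jailbreak", "neutral"
    safe: bool     # True if the grader judged the response aligned/safe


def rate(results: list[TestResult], categories: set[str]) -> float:
    """Fraction of results in the given categories that were judged safe."""
    selected = [r for r in results if r.category in categories]
    if not selected:
        raise ValueError(f"no results in categories {sorted(categories)}")
    return sum(r.safe for r in selected) / len(selected)


def safe_response_rate(results: list[TestResult]) -> float:
    # Share of responses that stay aligned under harmful or borderline prompts.
    # Assumption: neutral prompts and jailbreak attempts are excluded here.
    return rate(results, {"harmful", "borderline"})


def jailbreak_resiliency(results: list[TestResult]) -> float:
    # Share of prompt-injection / role-play attacks the model resisted.
    return rate(results, {"jailbreak"})


if __name__ == "__main__":
    demo = [
        TestResult("harmful", True),
        TestResult("harmful", True),
        TestResult("borderline", False),
        TestResult("borderline", True),
        TestResult("jailbreak", True),
        TestResult("jailbreak", False),
        TestResult("neutral", True),
    ]
    print(f"safe-response rate: {safe_response_rate(demo):.2f}")
    print(f"jailbreak resiliency: {jailbreak_resiliency(demo):.2f}")
```

With the demo data, three of the four harmful or borderline prompts receive safe responses and one of the two jailbreak attempts is resisted, so the script prints a safe-response rate of 0.75 and a jailbreak resiliency of 0.50. A real evaluation would also need a reliable grader, which is where most of the difficulty lies.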

We found that while the performance of the Chinese models was impressive, safety varied widely. Specifically, the Chinese models showed:

One thing these tests make clear is that claims of Chinese models being less hardened and trustworthy cannot be taken at face value. The full picture is more nuanced: a model like MiniMax M2 (Thinking) performed on par with or better than high-end proprietary Western models like Claude and GPT in safety and jailbreaking tests. Given the price-performance and privacy advantages of open source, there are good reasons for organizations to take a long look at these models, and security concerns appear to be fading as a reason not to at least give them a spin.

Open source and open-weight models can be valuable tools for experimentation, development, and cost reduction. But production use demands additional diligence—and the right governance infrastructure to manage risk at scale.

Holistic AI's Governance Platform provides enterprise-grade safeguards that can help make open Chinese models production-ready:

Before deploying any model, you need confidence in its safety posture. Holistic AI's platform enables you to:

Holistic AI's middleware intercepts and filters unsafe outputs in production, which includes:

Model behavior can drift over time. Holistic AI's platform tracks:

Open Chinese models can be powerful assets for innovation, but they require enterprise governance to deploy responsibly. Holistic AI's Governance Platform transforms promising open models into production-ready systems by providing the safety layers, monitoring, and controls that proprietary providers build in-house.

Rather than building these capabilities from scratch or avoiding open models entirely, organizations can leverage Holistic AI's platform to safely capture the cost, performance, and privacy benefits of open source AI.

Ready to evaluate open models safely? Schedule a demo to see how Holistic AI can help your organization deploy Chinese AI models with confidence.
