Unlike traditional AI models that generate single responses to prompts, agents can autonomously complete tasks through iterative cycles of reasoning, action, and interaction with external systems. They execute multi-step workflows, leverage tools, and adapt in real time as conditions change, all on behalf of users. This autonomy enables them to accomplish complex tasks independently, but it also introduces new categories of risk.

As organizations move agentic systems from prototype to production, they encounter new challenges. Testing no longer ends with a prompt and an output. It now extends to reasoning chains, tool selection, multi-step decisions, fallbacks, failure modes, and observability under uncertainty. Oversight must evolve accordingly.

These issues sit at the center of this year’s Great Agent Hack. They are why Holistic AI and UCL are bringing together hundreds of researchers, engineers, and students at UCL East Campus for a two-day challenge (November 15–16) focused on three governance challenges for agents: performance, transparency, and safety.

Across industries, teams often assume they must choose between these three dimensions.

Some level of balancing is always required, but the idea that organizations must fully sacrifice one dimension to maintain the others is in many ways a false trade-off. With the right infrastructure and testing methods, performance, transparency, and safety can reinforce each other rather than compete.

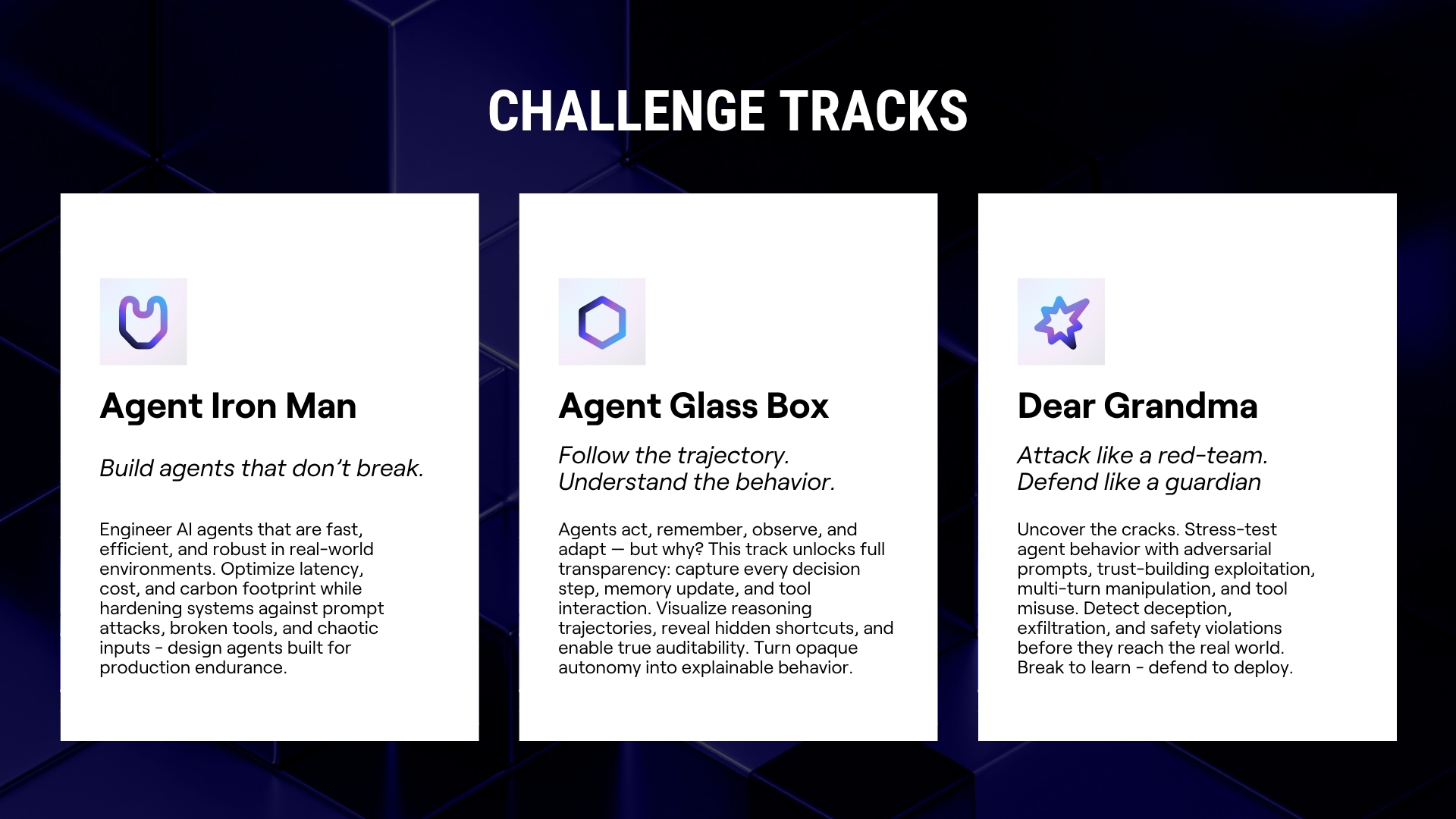

This year’s hackathon is designed to explore how to build agents that operate reliably in real environments while maintaining performance, safety, and observability.

Agents mark a shift from discrete outputs to autonomous action. They can reason through steps, choose tools, navigate ambiguity, and adapt based on feedback. This unlocks powerful applications, but it also demands new methods of evaluation.

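Agents require testing that captures not just final outputs but also reasoning chains, tool selection, multi-step decisions, fallbacks, and failure modes.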

Performance, transparency, and safety form the core dimensions of responsible and effective agent design. The hackathon is organized around these three pillars, with one track dedicated to each.

Performance track. Key question: Can agents remain efficient, responsive, and reliable when conditions aren’t ideal?

In real-world scenarios, agents encounter API delays, unexpected inputs, tool failures, and cost constraints. This track challenges teams to design agents that handle these realities without losing stability or control.

Transparency track. Key question: How can we make an agent’s reasoning and decision paths observable and auditable?

Agents make numerous internal choices that can remain hidden behind a multi-step decision tree. For high-stakes or regulated deployments, organizations must understand why an agent acted the way it did. This track encourages teams to design agents that surface their reasoning, transitions, memory updates, and explanations.

Safety track. Key question: How do we identify and mitigate misaligned or unsafe agent behavior before deployment?

Agents can exhibit vulnerabilities, such as prompt injection, manipulation, ambiguous tool use, or unintended autonomy, that only emerge across multi-step interactions. This track focuses on probing those weaknesses and strengthening the mechanisms that detect and correct them.

Events like this help deepen our understanding of how agents behave in practice. They surface new problem patterns, reveal where current testing methods succeed or fall short, and highlight edge cases that matter for real-world deployment. They also create opportunities for collaboration and shared learning among people tackling similar challenges from different angles.

Agentic AI is advancing quickly. As agents become more capable, organizations need governance approaches designed for systems that act, adapt, and make decisions across multiple steps. Performance, transparency, and safety aren’t competing priorities; they are structural requirements for deploying agents responsibly and effectively. The Great Agent Hack brings researchers and builders together to advance AI, push the state of the art, and help organizations innovate faster with fewer constraints, without compromising on safety.

15–16 November, UCL East Campus

Two days. Three tracks.

Hundreds of participants pushing the frontier of agentic AI.

Learn more and register → https://hackathon.holisticai.com

Space is limited.
